From Quantum Hype to Quantum Utility: Packaging QPUs for Real Workloads

Microsoft Azure uses logical qubits, cloud HPC, and AI to solve problems in chemistry.

Credit: Microsoft

A Plain-English Guide to Putting QPUs Inside Real Workflows

For years, quantum stories revolved around “supremacy” demos and lab benchmarks. Useful to scientists, sure—but hard for a CIO or product team to buy. This piece translates that world into plain English and a practical question: how do you package a quantum processing unit (QPU)—the quantum chip—so it contributes reliable compute inside a normal workflow? IBM calls that outcome quantum utility: when a quantum system can deliver reliable results at scales where brute-force classical methods stop being practical.

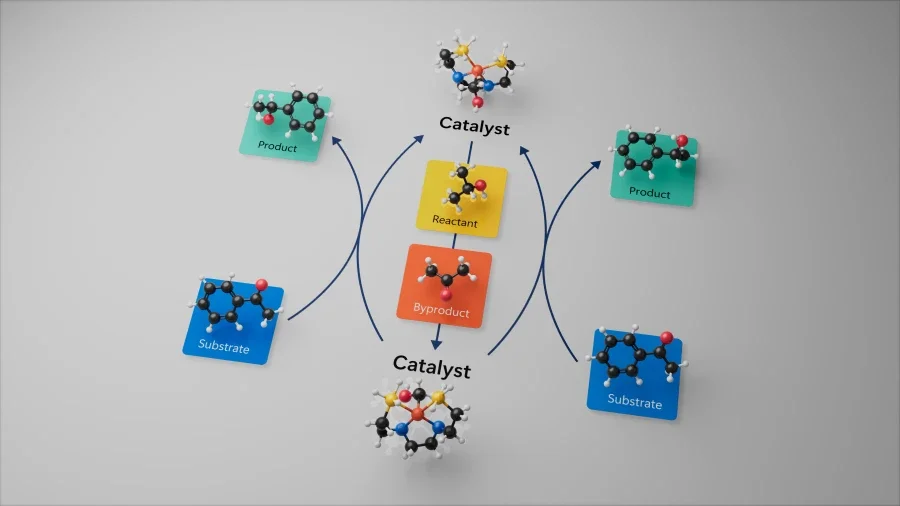

Treat Quantum As A Hybrid Service, Not A Replacement

Today, you don’t “switch to quantum.” You attach quantum to classical software, calling the QPU for specific sub-tasks (like certain sampling, optimisation, or chemistry steps) and doing everything else on CPUs/GPUs. Cloud platforms already package this: Amazon Braket Hybrid Jobs spins up classical resources, queues QPU time, then tears everything down so you pay only for what you use; Microsoft’s Azure Quantum Elements bundles AI and HPC tools around chemistry/materials pipelines with optional quantum calls. In practice, quantum is a managed plug-in, not a new data centre.
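As a rough illustration of that hybrid pattern, the sketch below runs a classical optimisation loop that calls out to a "quantum" step on each iteration. Everything in it is an assumption made for illustration: `estimate_energy` is a hypothetical stand-in that simulates shot noise on a single qubit, where a real job would submit a circuit through a managed service such as those named above.

```python
import math
import random


def estimate_energy(theta: float, shots: int, rng: random.Random) -> float:
    """Hypothetical stand-in for a QPU call.

    Simulates measuring one qubit after a rotation by theta: each shot
    returns +1 with probability cos^2(theta / 2), otherwise -1, so the
    average approaches cos(theta). A real workflow would submit a circuit.
    """
    p_plus = math.cos(theta / 2) ** 2
    total = sum(1 if rng.random() < p_plus else -1 for _ in range(shots))
    return total / shots


def minimise(shots: int = 2000, steps: int = 60, lr: float = 0.4, seed: int = 7):
    """Classical outer loop: gradient descent via the parameter-shift rule."""
    rng = random.Random(seed)
    theta = 0.3  # arbitrary starting point
    for _ in range(steps):
        # Two "quantum" calls per step estimate the gradient of cos(theta).
        plus = estimate_energy(theta + math.pi / 2, shots, rng)
        minus = estimate_energy(theta - math.pi / 2, shots, rng)
        grad = 0.5 * (plus - minus)
        theta -= lr * grad  # the update itself is purely classical
    return theta, estimate_energy(theta, shots, rng)


theta_opt, energy = minimise()
print(f"theta = {theta_opt:.2f}, energy = {energy:.2f}")
```

The point of the shape is that only `estimate_energy` would ever touch quantum hardware; the loop, the update rule and the logging stay in ordinary software.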

The Jargon, Decoded (And Why It Matters)

Qubit. The basic data unit on a QPU. It can occupy combinations of “0” and “1,” which lets certain algorithms explore structured spaces efficiently. But qubits are fragile and “noisy,” so we engineer around errors.

Transpilation. Every device has its own “accent.” Transpilation rewrites your circuit to match that device’s layout and to keep it as short and robust as possible. Modern toolchains do this automatically so you can focus on the problem, not the plumbing.

Error suppression/mitigation. Think “noise-cancelling” for circuits: techniques applied during and after runs to clean up results on today’s imperfect hardware—without full error correction. Vendors expose these as simple runtime settings so you don’t reinvent them for each job.
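One widely used mitigation idea is zero-noise extrapolation: run the same circuit at deliberately amplified noise levels, then extrapolate the results back to the zero-noise limit. The sketch below shows only the arithmetic, using a straight-line fit. The expectation values are made-up illustrative numbers, not measurements, and a vendor runtime may use a different technique or fit entirely.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def zero_noise_estimate(noise_scales, expectation_values):
    """Extrapolate measured expectation values back to noise scale 0."""
    _, intercept = linear_fit(noise_scales, expectation_values)
    return intercept


# Illustrative (not measured) values: the signal decays as noise is amplified.
scales = [1.0, 2.0, 3.0]
measured = [0.80, 0.65, 0.50]

mitigated = zero_noise_estimate(scales, measured)
print(f"raw: {measured[0]:.2f}, mitigated: {mitigated:.2f}")
```

The mitigated value is an estimate, not a correction with guarantees, which is one reason to record which mitigation settings were used for each run.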

Logical vs physical qubits. True fault tolerance encodes one logical qubit into many physical ones. The key test is operating below the error threshold, the regime in which making the code larger reduces the logical error rate instead of increasing it. That moved from theory to data in 2023 with Google’s surface-code experiment, which gave early evidence that scaling can improve reliability.
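To make the threshold idea concrete, here is a small sketch using a textbook heuristic for the surface code, in which the logical error rate scales roughly as prefactor × (p / p_threshold)^((d + 1) / 2) for code distance d. The threshold (1%) and prefactor (0.1) are assumed round numbers chosen for illustration, not figures from any particular device, and the model is only indicative.

```python
def physical_qubits(distance: int) -> int:
    """Qubits in one rotated surface-code patch: d*d data + (d*d - 1) measure."""
    return 2 * distance * distance - 1


def logical_error_rate(p: float, distance: int,
                       p_threshold: float = 0.01,
                       prefactor: float = 0.1) -> float:
    """Heuristic scaling model; threshold and prefactor are assumed values."""
    return prefactor * (p / p_threshold) ** ((distance + 1) / 2)


for p in (0.002, 0.015):  # one rate below the assumed threshold, one above
    regime = "below" if p < 0.01 else "above"
    print(f"physical error rate {p} ({regime} threshold)")
    for d in (3, 5, 7):
        print(f"  d={d}: {physical_qubits(d)} qubits, "
              f"logical error ~ {logical_error_rate(p, d):.1e}")
```

Below the assumed threshold, each step up in distance buys a lower logical error rate at the cost of more physical qubits; above it, adding qubits makes things worse.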

Primitives. Standard API calls (for example, Sampler and Estimator) that bundle transpilation, error-handling and execution so quantum steps are repeatable and auditable inside enterprise workflows.

Where Quantum Helps First (Without Hype)

You don’t need “faster than every supercomputer” to be useful. Utility shows up when hybrid pipelines deliver better answers or better economics under a clear metric. Examples:

Chemistry & materials: using a QPU to estimate certain molecular energies more efficiently inside an AI/HPC workflow—narrowing candidates before costly lab work. Azure’s stack is being built exactly for that integration. An AI/HPC workflow is a data-and-compute pipeline that blends AI (machine-learning models) with HPC (high-performance computing: big simulations/optimisation on clusters). Think of it as AI for the pattern-finding parts and HPC for the heavy physics or large-scale maths—wired together so each step feeds the next.

Optimisation & sampling: calling quantum routines to explore tough landscapes (e.g., scheduling or portfolio sub-steps) and feeding the result back to a classical solver. Braket’s hybrid orchestration is designed for these end-to-end runs. Braket Hybrid Jobs is Amazon’s managed runtime: it runs your classical code with priority access to a chosen QPU, moves data between the two during execution, and captures configurations and logs, so the entire quantum–classical loop runs as one auditable job.

The operational rule is simple: version your circuits, record your transpilation and error-mitigation settings, and always keep a classical baseline so gains are measurable and reproducible.
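One possible way to capture that rule in code is a small run record that fingerprints the circuit and its settings and stores the classical baseline alongside the quantum result. This is a minimal sketch: the `RunRecord` class, its field names, the setting keys and the numbers are all hypothetical, and the circuit text is a generic two-qubit OpenQASM example.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class RunRecord:
    circuit_source: str        # circuit text, e.g. OpenQASM
    transpile_settings: dict   # hypothetical keys, for illustration
    mitigation_settings: dict  # hypothetical keys, for illustration
    quantum_result: float
    classical_baseline: float

    def fingerprint(self) -> str:
        """Short hash of the circuit and settings (not the results)."""
        config = {
            "circuit": self.circuit_source,
            "transpile": self.transpile_settings,
            "mitigation": self.mitigation_settings,
        }
        payload = json.dumps(config, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]

    def gain_over_baseline(self) -> float:
        """Positive when the quantum step found a lower (better) cost."""
        return self.classical_baseline - self.quantum_result


record = RunRecord(
    circuit_source='OPENQASM 2.0; include "qelib1.inc"; '
                   'qreg q[2]; h q[0]; cx q[0],q[1];',
    transpile_settings={"optimization_level": 2},
    mitigation_settings={"method": "zero_noise_extrapolation"},
    quantum_result=41.5,      # illustrative cost value
    classical_baseline=43.0,  # illustrative cost value
)
print(record.fingerprint(), record.gain_over_baseline())
```

Because the fingerprint ignores the results, two runs with the same fingerprint are directly comparable, and a changed fingerprint signals that something in the configuration moved.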

How To Explain This To Leadership

Boards now ask two questions at once: “Can we try quantum safely?” and “Are we migrating our crypto?” Treat them as twin tracks.

Track A — Explore with guardrails. Run pilots where the QPU is a contained service inside a workflow you already operate. Use managed runtimes with explicit error-handling options and publish acceptance tests (e.g., “quantum step must match a classical reference within tolerance on these control instances”). This keeps experiments accountable.
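An acceptance test of the kind quoted above can be very small. The sketch below compares quantum outputs against classical reference values on a set of control instances and reports any that fall outside tolerance. The instance names, values and the 5% relative tolerance are illustrative assumptions; a real pilot would agree them with stakeholders up front.

```python
import math


def acceptance_failures(quantum_results: dict, classical_reference: dict,
                        rel_tol: float = 0.05, abs_tol: float = 1e-6) -> dict:
    """Return {instance: (quantum, reference)} for every failed control."""
    failures = {}
    for name, reference in classical_reference.items():
        value = quantum_results.get(name)
        # A missing result counts as a failure, not a silent pass.
        if value is None or not math.isclose(
            value, reference, rel_tol=rel_tol, abs_tol=abs_tol
        ):
            failures[name] = (value, reference)
    return failures


# Illustrative numbers only: reference values from a trusted classical solver.
reference = {"instance_a": -1.137, "instance_b": -7.882, "instance_c": -2.000}
quantum = {"instance_a": -1.120, "instance_b": -7.950, "instance_c": -1.700}

failed = acceptance_failures(quantum, reference)
print("PASS" if not failed else f"FAIL: {failed}")
```

Publishing the tolerance and the control instances before the pilot starts is what makes the result accountable.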

Track B — Migrate your encryption in parallel. Even if you never use a QPU, you should adopt post-quantum cryptography (PQC). In August 2024, NIST approved three PQC standards: FIPS 203 (ML-KEM), FIPS 204 (ML-DSA) and FIPS 205 (SLH-DSA). In March 2025, the UK’s NCSC published a migration timeline: complete discovery and an initial migration plan by 2028, carry out the highest-priority migrations by 2031, and complete migration by 2035. This is a security programme you can brief to audit and risk committees today.

Packaging For Real Workloads (A Buyer’s View)

Workflow, not widgets. Show exactly where the QPU sits: pre-processing in, post-processing out. Hybrid services like Braket and managed chemistry stacks on Azure make this orchestration concrete and auditable.

Make errors boring. In proposals and docs, use plain English: “We reduce noise during runs and correct for it afterwards; when we use error-corrected qubits later, reliability improves as we scale.” Back this with vendor runtime docs and peer-reviewed error-correction results.

Standard API calls. Build around primitives so teams don’t hand-roll device specifics. It keeps results stable when hardware or compilers change and makes audits faster.

Productise the experience. Offer QPU credits, SLAs for queue times and upgrades, and clear comms when devices change (just like GPU generations). Braket’s hybrid job queues are a helpful precedent for predictable turnaround.

Governance artefacts. Keep runbooks, versioned circuits, and a changelog of transpiler and mitigation settings. If a result shifts after a platform update, buyers should see exactly why.
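A changelog is most useful when it can answer "what changed between these two runs?" directly. As a minimal sketch, the function below compares two flat settings snapshots and lists every key that was added, removed or altered. The setting names and version strings are hypothetical examples, not fields from any vendor's API.

```python
def diff_settings(before: dict, after: dict) -> dict:
    """Return {key: (old, new)} for every setting that differs."""
    missing = "<absent>"
    changes = {}
    for key in sorted(set(before) | set(after)):
        old = before.get(key, missing)
        new = after.get(key, missing)
        if old != new:
            changes[key] = (old, new)
    return changes


# Hypothetical snapshots taken before and after a platform update.
run_before = {
    "transpiler_version": "1.2.0",
    "optimization_level": 2,
    "mitigation": "readout",
}
run_after = {
    "transpiler_version": "1.3.0",
    "optimization_level": 2,
    "mitigation": "readout",
    "dynamical_decoupling": True,
}

for key, (old, new) in diff_settings(run_before, run_after).items():
    print(f"{key}: {old} -> {new}")
```

Attaching a diff like this to any shifted result gives buyers the "exactly why" without needing to read the platform's release notes.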

Plain-English FAQ (For Stakeholders Who Don’t Live In Qubits)

Will we own a quantum computer? No. You’ll rent time in the cloud and call the QPU only where it helps—wrapped inside your normal software.

Isn’t the hardware too noisy? That’s why we use suppression/mitigation today and track the emergence of logical qubits operating below the error threshold. We get cleaner answers now, with a path to fault tolerance later.

What value should we expect this year? Faster screening in materials and chemistry, better heuristics in certain optimisations, and clarity about where quantum doesn’t help yet. Success looks like a governed pipeline with a few meaningful metrics moving.

What about security while we experiment? PQC migration runs in parallel—adopt NIST’s standards and follow the NCSC timeline so today’s sensitive data stays safe for the long term.

What To Watch Next

Expect steadier progress in managed runtimes (more built-in mitigation, simpler primitives), plus continued demonstrations that logical qubits improve with scale. Together, these make quantum a credible plug-in to mainstream software rather than a detour to a lab. That is how hype turns into utility—one governed, hybrid workload at a time.